A picture instead of a million words

Extending events

So how should we handle reset() or freeze()/unfreeze() of the worldview? The ISensors method reset() is not enough, as we may need to just fade out; moreover, reset() really means “synchronize with logic”, so the name is wrong. It should be something like “doLogicEnded” or “worldViewUnfrozen”, so that the sensor module can listen for it and react when the event arises. Thus we should provide such events in general and let people listen for them, doing whatever they need when such an event is received.

Thus we should implement more events in the core in general, and we probably need a new layer for these virtual events / virtual commands.
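A minimal sketch of what such lifecycle events could look like. All names here (`LogicLifecycleListener`, `LogicLifecycle`, `FadingSensor`) are assumptions made for illustration; nothing like this exists in the core yet.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical listener for the proposed lifecycle events; names are assumptions.
interface LogicLifecycleListener {
    void logicEnded();        // doLogic() has just returned
    void worldViewUnfrozen(); // the world view accepts new events again
}

// Hypothetical event source that the core would drive.
class LogicLifecycle {
    private final List<LogicLifecycleListener> listeners = new ArrayList<>();

    void addListener(LogicLifecycleListener listener) {
        listeners.add(listener);
    }

    // Would be called by the core after each doLogic() iteration.
    void fireLogicEnded() {
        for (LogicLifecycleListener l : listeners) l.logicEnded();
    }

    // Would be called by the core once the world view is resumed.
    void fireWorldViewUnfrozen() {
        for (LogicLifecycleListener l : listeners) l.worldViewUnfrozen();
    }
}

// Example sensor module that fades its readings instead of wiping them.
class FadingSensor implements LogicLifecycleListener {
    double confidence = 1.0;
    int unfrozenCount = 0;

    @Override public void logicEnded() { confidence *= 0.5; } // fade out, do not reset
    @Override public void worldViewUnfrozen() { unfrozenCount++; }
}
```

With this shape a module decides for itself whether "logic ended" means wipe, fade, or nothing at all, which is the point of replacing a single reset() call.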

MODULES - LAST PROPOSITION

Memory modules will be simple, almost just sensors. They will work in sync with the doLogic() methods. “Memory” will register various listeners on the WorldView and store events between doLogic() calls.

A) doLogic is called

B) doLogic is running and querying memory modules, which are frozen

C) doLogic ends

D) memory modules are cleared and new events that arrived during A/B/C are committed to the modules

E) goto A

We assume there is only a SyncThreaded bot (no ASync-threaded bot).

    interface ISensoryModule {
        public void reset(); // this will wipe the sensory readings in the module
    }

ISensoryModule.reset(); // will be called in D before the WorldView is "resumed" (so as not to wipe fresh information).

This leads to the problem that we need a registerModule() method that will allow users to subscribe their modules.
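The A-E cycle and the registerModule() idea can be sketched as follows. This is only an illustration under assumptions: `BufferedEventModule`, `SyncBot`, `commit()` and `runIteration()` are hypothetical names, and events are plain strings to keep the example short.

```java
import java.util.ArrayList;
import java.util.List;

interface ISensoryModule {
    void reset(); // wipes the sensory readings in the module
}

// Hypothetical memory module: readings stay frozen while doLogic() runs,
// events that arrive meanwhile wait in a pending buffer.
class BufferedEventModule implements ISensoryModule {
    private final List<String> readings = new ArrayList<>();
    private final List<String> pending = new ArrayList<>();

    void onEvent(String event) { pending.add(event); }   // world view listener callback
    List<String> getReadings() { return new ArrayList<>(readings); }
    @Override public void reset() { readings.clear(); }
    void commit() { readings.addAll(pending); pending.clear(); }
}

// Hypothetical sync-threaded bot skeleton with the proposed registerModule().
class SyncBot {
    private final List<BufferedEventModule> modules = new ArrayList<>();

    void registerModule(BufferedEventModule module) { modules.add(module); }

    void runIteration(Runnable doLogic) {
        doLogic.run();                      // A-C: logic reads the frozen modules
        for (BufferedEventModule m : modules) {
            m.reset();                      // D: wipe old readings...
            m.commit();                     //    ...then commit the buffered events
        }
    }                                       // E: the caller loops back to A
}
```

Because reset() runs before commit(), events that arrived during the iteration survive into the next doLogic() call instead of being wiped.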

BOT types

1) event-driven bot … has a listener on the END message that starts doLogic; doLogic will at first be implemented by us and will check whether the last doLogic took too much time

2) concurrent bot … has a second thread; must have a LockableWorldView!
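A rough sketch of the event-driven variant, assuming a hypothetical `EventDrivenBot` base class. The injectable clock and the per-call time budget are assumptions added so that the overrun check can be shown and tested; the notes do not specify how the check should work.

```java
import java.util.function.LongSupplier;

// Hypothetical event-driven bot: the END message of a batch triggers doLogic().
abstract class EventDrivenBot {
    private final LongSupplier clockMillis; // injectable clock (assumption, for testability)
    private final long budgetMillis;        // assumed time budget for one doLogic() call
    private int overruns = 0;

    EventDrivenBot(LongSupplier clockMillis, long budgetMillis) {
        this.clockMillis = clockMillis;
        this.budgetMillis = budgetMillis;
    }

    protected abstract void doLogic();

    // Listener callback for the END message.
    final void onEndMessage() {
        long start = clockMillis.getAsLong();
        doLogic();
        long elapsed = clockMillis.getAsLong() - start;
        if (elapsed > budgetMillis) overruns++; // this doLogic() call took too long
    }

    int getOverruns() { return overruns; }
}
```

A real implementation would probably log a warning or skip a batch rather than just count overruns.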

Body module

Questions: ergonomics of names

1) you don't know what you need and want to look it up: body.simple.locomotion.move()

2) or you know what you're doing and have just loc.move() or body.move()

interface SimpleModule

interface AdvancedModule extends SimpleModule (only if viable)

IMap, IPathPlanner, IPathExecutor

Executor --calls--> PathPlanner --uses information from--> Map

    interface IMap {
        // already done I think as a specific type of event
    }

    interface IPathPlanner {
        public void getPathTo(ILocation location);
    }
    // can be GameBots / Floyd-Warshall / A* / etc.
    // two types of IPathPlanner ... sync (A*, etc...) / async (GB)

    interface IPathExecutor {
        public void goTo(ILocation location);
    }

- strictly event based - produces events for everything (path started, path can't be obtained, path broken, location reached, etc.); it doesn't matter whether it uses a sync or an async path planner

IPathExecutor registers itself with the WorldView, watching for events that need to be handled (e.g. steering / dodging ~ hearing noises…)

IPathExecutor defines new events (it is a source of events): path broken / location reached

We should also have path executor decorators like “InterruptiblePathExecutor” that will watch for the user-sent commands RunTo and TurnTo and stop itself upon such an action.
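The decorator idea could look roughly like this. It is a sketch under assumptions: `stop()`, `isExecuting()`, `onUserCommand()` and `RecordingPathExecutor` are not part of the notes above and are introduced only to make the example complete; events are recorded as strings instead of real event objects.

```java
import java.util.ArrayList;
import java.util.List;

interface ILocation {}

interface IPathExecutor {
    void goTo(ILocation location);
    void stop();            // assumption: not in the notes, needed by the decorator
    boolean isExecuting();  // assumption, for illustration only
}

// Trivial stand-in executor that records the events it would produce.
class RecordingPathExecutor implements IPathExecutor {
    final List<String> events = new ArrayList<>();
    private boolean executing;

    @Override public void goTo(ILocation location) { executing = true; events.add("PATH_STARTED"); }
    @Override public void stop() { if (executing) { executing = false; events.add("PATH_STOPPED"); } }
    @Override public boolean isExecuting() { return executing; }
}

// Decorator that stops the wrapped executor when the user sends RunTo or TurnTo.
class InterruptiblePathExecutor implements IPathExecutor {
    private final IPathExecutor delegate;

    InterruptiblePathExecutor(IPathExecutor delegate) { this.delegate = delegate; }

    @Override public void goTo(ILocation location) { delegate.goTo(location); }
    @Override public void stop() { delegate.stop(); }
    @Override public boolean isExecuting() { return delegate.isExecuting(); }

    // Hypothetical hook called for every command the user logic sends.
    void onUserCommand(String commandName) {
        if (commandName.equals("RunTo") || commandName.equals("TurnTo")) delegate.stop();
    }
}
```

Keeping the interruption rule in a decorator means the plain executor stays unaware of user commands, and other policies can be stacked the same way.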

RayTracingManager

Implements: ISensoryModule

Upon calling reset() it clears the sensory readings of all rays it has

Methods:

    interface IRayTracingModule {
        IRay addRay(way, distance) // returns IRay object that represents the new ray sensor
        removeRay(IRay ray)
        clearRays()
    }

    interface IRay {
        boolean get()                  // whether the ray bounces
        AutoTraceMessage getMessage()  // details of the sensor reading
    }
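A compact sketch of how the manager and its rays might fit together. The `Ray` class, the string-typed direction (standing in for the unspecified "way" parameter), `setHit()` and `rayCount()` are assumptions for illustration; getMessage() is left out because the message type is not defined here.

```java
import java.util.ArrayList;
import java.util.List;

interface ISensoryModule { void reset(); }

interface IRay {
    boolean get(); // whether the ray hit something
}

class Ray implements IRay {
    final String direction; // stand-in for the "way" parameter; the real type is not specified
    final double distance;
    private boolean hit;

    Ray(String direction, double distance) { this.direction = direction; this.distance = distance; }
    @Override public boolean get() { return hit; }
    void setHit(boolean hit) { this.hit = hit; } // would be fed by auto trace messages
}

class RayTracingManager implements ISensoryModule {
    private final List<Ray> rays = new ArrayList<>();

    Ray addRay(String direction, double distance) {
        Ray ray = new Ray(direction, distance);
        rays.add(ray);
        return ray;
    }
    void removeRay(IRay ray) { rays.remove(ray); }
    void clearRays() { rays.clear(); }
    int rayCount() { return rays.size(); }

    // Clears the readings of all rays but keeps the rays themselves.
    @Override public void reset() { for (Ray r : rays) r.setHit(false); }
}
```

Note the distinction this makes explicit: reset() wipes readings, while clearRays() removes the sensors.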

Interface IPickupableObject

- has its own id

- has a type

- extends InfoObject? why?

- IInventory object in the base … creates a new type of event: pickup (lost?)

GesturesManager

- Has a second, binary connection to GameBots. Through this connection it can trigger animations for the bot.

- Generates the event BMLAct finished.

- It can also send regular GameBots commands through the normal GB connection (such as MESSAGE or TURNTO). These commands are necessary for more complex BMLActs that synchronize speech with a gesture, or with a gesture and some simple movement.

- Works in two modes, good and evil.

- In evil mode, when a BMLAct is executed, the module takes over control of the bot completely (the logic freezes, or the logic cannot run any commands). The logic is resumed after the act is finished.

- In good mode, when a BMLAct is executed, the logic is not stopped. It can ask the module whether the gesture is still running. The event BMLAct finished is raised when the act is finished. When the logic runs another command while the act is executing, we interrupt the BML act and can perhaps generate some ActInterrupted event…
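The difference between the two modes can be sketched as a small gate in front of the logic's commands. Everything below is hypothetical (`Mode`, `executeAct()`, `onLogicCommand()`, and so on); the real module would talk to GameBots instead of flipping flags.

```java
// Hypothetical sketch of the two modes; all names are assumptions.
class GesturesManager {
    enum Mode { GOOD, EVIL }

    private final Mode mode;
    private boolean actRunning;
    private boolean lastActInterrupted;

    GesturesManager(Mode mode) { this.mode = mode; }

    void executeAct(String bmlAct) { actRunning = true; lastActInterrupted = false; }

    // Called when the animation completes; would raise the "BMLAct finished" event.
    void actFinished() { actRunning = false; }

    boolean isActRunning() { return actRunning; }
    boolean wasLastActInterrupted() { return lastActInterrupted; }

    // Returns true if the logic command may be sent to GameBots.
    boolean onLogicCommand(String command) {
        if (!actRunning) return true;
        if (mode == Mode.EVIL) return false; // module owns the bot, command is dropped
        actRunning = false;                  // GOOD mode: the command interrupts the act
        lastActInterrupted = true;           // would raise an ActInterrupted event
        return true;
    }
}
```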

Modules descriptions

You can add/change the description of proposed modules. The image source can be found in the SVN repository in \branches\devel\docs\documentation\architecture\media\ - UT2004Agent.svg.

Questions: Where and how will the good old Pogamut methods be implemented?

- prePrepareAgent()

- postPrepareAgent()

- shutdownAgent()

The protocol HandShake is a bit messy in our core (as Jakub noted); what will we do with it?

How should we organize packages in the agents - body, sensors, memory, worldview - what should the hierarchy be?

Ondra: They should be as independent as possible.

General remark: There are too many methods/commands for a beginner. I would provide a SimpleMemory, SimpleCommands, SimpleNavigation etc. almost everywhere and create a few (let's say 2-3) bots using strictly those interfaces, so the beginner won't be overwhelmed and put off by a hundred methods they are supposed to know about.

Commands module

- in fact two modules - one for beginners, one for experts

- ISimpleCommands

- basic movement/shooting/etc. commands - suggestion below in List of commands

- for beginners

- ICommands

- all possible commands, even those from simple commands, so the user does not have to remember which commands are where

- usage: agent.commands.command(parameters);

- There will be body.act(ICommand) - all commands can be executed this way (as defined in XMLs)

- NEW: Commands are being implemented. See them in the repository, package cz.cuni…bot.commands

- SimpleLocomotion

- MoveTo(Location) - simple RUNTO command; accepts anything that implements ILocated or is a location.

- Stop() <SC> - stops the bot immediately

- TurnHorizontal(amount) - roll is not used for bots.

- TurnVertical(amount)

- TurnTo(Location) - the target can again be an object or a location…

- Jump() <SC>

- Run() - disables walking

- Walk() - enables walking

- AdvancedLocomotion

- StrafeTo(Target,Focus) - Focus can now be either an object or a location - Should we create two strafe methods, or allow all of this in one?

- MoveContinuously(Speed) - issues the CMOVE command

- Rotate(Axis, Amount) - which axis and how much. Can be divided into two methods, RotateHorizontally and RotateVertically

- DoubleJump() <SC>

- Crouch() <SC> X Uncrouch() <SC>

- setSpeed(double) - sets speed.

- SimpleShooting

- Shoot()

- StopShoot()

- ChangeWeapon()

- StopShoot()<SC> - stops shooting.

- AdvancedShooting

- ShootPrimary(Target) - Target - anything ILocated. Split into ShootPrimary, ShootSecondary ?

- ShootSecondary(Target)

- Shoot(Target, Mode, Time) - how long we should be shooting

- ShootCharged(Target,Mode) - for charging weapons… Probably the same as the command above with special settings

- ChangeWeapon(Weapon)<SC> - Weapon in inventory with correct inventoryId

- ChangeWeaponToBest()<SC> - issues UnrealScript command.

Jakub Gemrot: Shoot(Target,mode) - I don't want to write true/false in every shoot command

- ChangeWeaponToBest() is sort of magical - it's hard to explain to a beginner in detail, but I don't see another option here

Radim Vansa: Would it be possible to use a ChangeWeapon(something.suggestWeapon()) method? Is there only a command in UnrealScript to change to the best weapon, or is there a way to ask before changing to it? Another question is what the “something” should be - some module that collects info about weapons, with a simple default implementation that suggests a weapon regardless of the attributes passed?

- Action

- ThrowWeapon() - throws the current weapon out. Should this be here or elsewhere?

- Respawn()

- startAdrenalineCombo(String)

Ondrej Burkert: ? UseItem() - runs an animation on the item? decreases its quantity by one? destroys the item?

- ? UseItem(amount) - decreases its quantity by amount - to be able to eat apples, etc…

- ? AddInventory(Inventory) - adds inventory to the bot.

Michal Bida: Shouldn't this be in inventory?

- Configure commands

- Init(attributes) - sends the init command. Should be either here or in no category - not sure.

- we already have a configure command that is easy to use (nobody would ever want to write a true/false combination there, as you usually do not know what all those fields mean!); instead you may use body.act(new Configure().setWalk(true).setAutoTrace(false)); notice the sequence of setters

- Communication

- SendMessage(Global, Text)<SC> - global or team message.

- SendMessageTo(UnrealId, Text)<SC> - private message

- SendTextBubble(Text)

- SetDialog(UnrealId,DialogId,Text,Option0-Option9) - sets dialog by bot for a player who has the bot selected

Ondrej Burkert: How about a new module ICommunication, which would provide some sort of message handling capabilities? Though I have no exact idea of what it should do.

- SendMessageBubble(Text) - shows a bubble with a message over the agent - I am not sure whether this is possible, I just remember that Michal said something about such a feature.

Michal Bida: Yes, this is possible by simply issuing the MESSAGE command with the correct attributes - GB API.
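The body.act(ICommand) entry point and the chained setters of Configure mentioned in the command list above can be sketched as follows. This is an illustration only: `toMessage()`, the `Body` class with its `sent` list, and the exact text format of the produced message are assumptions, not the actual classes in the commands package.

```java
import java.util.ArrayList;
import java.util.List;

interface ICommand {
    String toMessage(); // text sent to GameBots; the wire format used here is an assumption
}

// Fluent command: only the attributes that were explicitly set get sent.
class Configure implements ICommand {
    private Boolean walk;
    private Boolean autoTrace;

    Configure setWalk(boolean walk) { this.walk = walk; return this; }
    Configure setAutoTrace(boolean autoTrace) { this.autoTrace = autoTrace; return this; }

    @Override public String toMessage() {
        StringBuilder sb = new StringBuilder("CONF");
        if (walk != null) sb.append(" {Walk ").append(walk).append('}');
        if (autoTrace != null) sb.append(" {AutoTrace ").append(autoTrace).append('}');
        return sb.toString();
    }
}

class Body {
    final List<String> sent = new ArrayList<>(); // stand-in for the GameBots connection
    void act(ICommand command) { sent.add(command.toMessage()); }
}
```

Each setter returns the command itself, so the user names only the fields they care about and never has to supply a full true/false combination.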

IRayTracing

- decided to make a module from this

- StartAutoTrace() - issues configure command, but should be here also.

- StopAutoTrace() - issues configure command, but should be here also.

- AddRay(ray attributes) - adds ray to ray tracing

- ConfigureRay(id, attributes) - configures ray, includes some Id check, so we know that the ray exists.

- RemoveRay(RayId) - removes ray from ray tracing

- RemoveAllRays() - removes all rays from ray tracing

- FastTrace(ray attributes) - issues fasttrace command

- Trace(ray attributes) - issues trace commands

- getIsRayTracing() - on/off

- getAllRays()

- getLastRayResult(rayId) - for one ray

- getAllRayResults(rayId, Time) - for one ray, all results since the given time

- getLastRaysResults() - for all rays

- getAllRaysResults(Time) - for all rays

- getHasIssuedTrace/FastTraceCommand()

- getLastTraceResult(Id)

- getLastFastTraceResult(Id)

- getAllTraceResult(Time)

- getAllFastTraceResult(Time)

INavigation

- protected IMovementManager;

- protected IPathPlanner;

- PathPlanner will include the IMap object, as it will be the source of information about the map, and MovementManager will be responsible for steering, dodging etc. (the interfaces are already in the devel branch). The moveAlongPath method will use default implementations of these interfaces underneath.

- part of the implementation is a path manager and a path finder (interfaces that give a way to define your own pathfinding; by default GB)

- this module will be included in IMap, or IMap should be included in INavigation. How will we do this?

- Methods

- safeRunToLocation()

- GetPath(Target) - target can be a location or ILocated.

- RunAlongPath(Id of path)

- RunAlongList(list of ILocated objects) - will request paths and do everything automatically

- ReachCheck(Target) - anything ILocated.

- GetPath()

- RunAlongListOfILocated(List)

- nearestWeapon(type)

- nearestXY()

- visibleSomething() - this should be also in memory. right?

- or seeSomething(type) - this should be also in memory. right?

IMap

- the IMap object should be exclusively a data object (navpoints, objects and relations between them) without any additional logic. The logic will be provided in other objects working upon the IMap (e.g. the INavigation module)
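To illustrate the split between a pure data map and the logic built on top of it, here is a small sketch. `NavMap` and `BfsPathPlanner` are hypothetical names, navpoints are plain strings, and breadth-first search is used only because it is short; it stands in for the planners named earlier (GameBots, Floyd-Warshall, A*) and is not one of them.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Pure data: navpoint ids and links between them, no path logic.
class NavMap {
    private final Map<String, List<String>> links = new HashMap<>();

    void addLink(String from, String to) {
        links.computeIfAbsent(from, k -> new ArrayList<>()).add(to);
        links.computeIfAbsent(to, k -> new ArrayList<>()).add(from);
    }
    List<String> neighbours(String navPoint) {
        return links.getOrDefault(navPoint, Collections.emptyList());
    }
}

// Logic lives outside the map: a synchronous planner using breadth-first search.
class BfsPathPlanner {
    private final NavMap map;
    BfsPathPlanner(NavMap map) { this.map = map; }

    // Returns the navpoints from start to goal, or an empty list if unreachable.
    List<String> getPath(String start, String goal) {
        Map<String, String> cameFrom = new HashMap<>();
        ArrayDeque<String> queue = new ArrayDeque<>();
        cameFrom.put(start, start);
        queue.add(start);
        while (!queue.isEmpty()) {
            String current = queue.poll();
            if (current.equals(goal)) break;
            for (String next : map.neighbours(current)) {
                if (!cameFrom.containsKey(next)) { cameFrom.put(next, current); queue.add(next); }
            }
        }
        if (!cameFrom.containsKey(goal)) return Collections.emptyList();
        List<String> path = new ArrayList<>();
        for (String at = goal; !at.equals(start); at = cameFrom.get(at)) path.add(at);
        path.add(start);
        Collections.reverse(path);
        return path;
    }
}
```

Since the planner only reads the map through neighbours(), swapping in another algorithm does not touch the data object.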

IListeners

- added through public enum Messages - all available

- AddListener(Message,…)

- AddListeners(List<Message>,…)
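A possible shape for enum-keyed listener registration. The enum below is a tiny illustrative subset, and `Listeners`, `dispatch()` and the string payload are assumptions; the real enum would list all available messages and listeners would receive typed message objects.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.EnumMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Illustrative subset only; the real enum would list all available messages.
enum Messages { HIT, WALL_COLLISION, FALL, END }

class Listeners {
    private final Map<Messages, List<Consumer<String>>> byType = new EnumMap<>(Messages.class);

    void addListener(Messages type, Consumer<String> listener) {
        byType.computeIfAbsent(type, k -> new ArrayList<>()).add(listener);
    }

    void addListeners(List<Messages> types, Consumer<String> listener) {
        for (Messages type : types) addListener(type, listener);
    }

    // Would be called by the (hypothetical) parser for every incoming message.
    void dispatch(Messages type, String payload) {
        List<Consumer<String>> targets = byType.getOrDefault(type, Collections.emptyList());
        for (Consumer<String> l : targets) l.accept(payload);
    }
}
```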

ISensors

- See - what we can actually see.

- seeItem, Items - make methods for each item category as well, or just seeItem(Category)?

- seePlayer, Players

- seeMover, Movers

- seeProjectile, Projectiles

- seeFlag, Flags (?)

- seeNavPoints, NavPoints

- seeObject(Category) ? - category is anything we can see - players, items, navpoints…

- getting information should be in format: get[object]LastInfo(objectID) - for all objects above

- Self + something like getPreviousSelfBatch() and getAllSelfBatches(Time)

- getId()

- getName()

- getTeam()

- getIsDriving() - is in vehicle

- getLocation()

- getRotation()

- getVelocity()

- getIsShooting()

- getIsShootingPrimary()

- getIsShootingSecondary()

- getWeapon()

- getWeaponHasSecondaryMode() ?

- getPrimaryAmmo()

- getSecondaryAmmo()

- getAdrenaline()

- getHealth()

- getArmor() - upper and lower altogether

- getUpperArmor()

- getLowerArmor()

- getHasCombo()

- getCombo()

- getHasUDamage()

- getIsCrouched()

- getIsWalking()

- getIsInAir() or getIsFalling() - but may be just jumping…

- getFloorNormal()

- getFloorLocation()

- getHasFlag() - for CTF

- ItemOthers - doesn't fit or dunno where to put it

- getGameInfo()

- getScore(Player)

- getMyScore()

- getAllScores()

- getReachCheckResult(Id) - reach results to events?

- getLastReachCheckResult() - reach results to events?

- getAllReachCheckResults(Time)

- getLastPingResult() - ping to events?

- getAllPingResults(Time)

- isFlagStolen() - for CTF

- getDomPointsStatus() - for DOM

IMemory

- Known/Seen - what we actually know (position of items, last position of players…) and what we have already seen

- seenItems

- seenPlayers

- seenNavPoints

- seenMovers

- seenFlags

- knownItems - exported at the beginning

- knownPlayers - dunno what this should be… ideas? :)

- knownNavPoints - exported at the beginning

- knownMovers - exported at the beginning

- knownFlags - exported at the beginning

Jakub Gemrot: I would vote to abandon this seen/known completely, as it is easy to obtain it from IWorldView!

Michalb: If there is some nice way to do it, why not…

- Events - events that happened to us recently, or that can happen

- Will be solved with public static enum Events;

- three methods:

- hasReceivedEvent(Events, Time)

- getLastEvent(Events)

- getAllEvents(Events)

- Was:

- isThreatenedByProjectile() - projectile that can possibly hit us

- hasPickedUp()

- hasNewInventory() - should this be here?

- hasHit() - Hit message

- hasCollided() - to wall, WAL message

- hasFallen() - FAL message

- hasHearedNoise - HRN or HRP message

- hasReceivedMessage - MESSAGE

- hasZoneChanged() - zone change message

- getThreateningProjectile() - or Projectiles ? can be more of them

- getLastPickup()

- getLastNewInventory() - should this be here?

- getLastHit()

- getLastWallCollision()

- getLastFallLocation()

- getLastMessage()

- getLastNoise()

- getLastZoneChange()

- getLastKill()

- getLastMyKill()

- getLastDeath()

- getAllHits(Time)

- getAllThreateningProjectiles(Time)

- getAllPickups(Time)

- getAllNewInventory(Time) - should this be here?

- getAllWallCollisions(Time) - returns all wall collisions we registered since that time

- getAllFalls(Time) - returns all falls we registered since that time

- getAllMessages(Time) - returns all messages we heard since that time

- getAllNoises(Time) - returns all noises we heard since that time

- getAllZoneChanges(Time)

- getAllKills(Time)

- getAllMyKills(Time)

- getAllDeaths(Time)

Jakub Gemrot: Those kinds of methods are a bit of a mess … what could be better is a wrapper for an event listener that would provide getLast() / getAll() / getBetweenTime(start, stop) methods, so the user initializes such wrappers only for the events he/she is interested in
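The wrapper suggested in the last comment could look roughly like this. `EventHistory` and `onEvent()` are hypothetical names, and time is a plain double standing in for whatever game-time type the core uses.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical wrapper around one event listener; the user creates one
// only for the event types they are interested in.
class EventHistory<E> {
    private static final class Entry<E> {
        final double time;
        final E event;
        Entry(double time, E event) { this.time = time; this.event = event; }
    }

    private final List<Entry<E>> entries = new ArrayList<>();

    // Listener callback; time is the game time at which the event arrived.
    void onEvent(double time, E event) { entries.add(new Entry<>(time, event)); }

    // Most recent event, or null if none has been received.
    E getLast() { return entries.isEmpty() ? null : entries.get(entries.size() - 1).event; }

    List<E> getAll() { return getBetweenTime(Double.NEGATIVE_INFINITY, Double.POSITIVE_INFINITY); }

    // Events with start <= time <= stop, in arrival order.
    List<E> getBetweenTime(double start, double stop) {
        List<E> result = new ArrayList<>();
        for (Entry<E> e : entries) {
            if (e.time >= start && e.time <= stop) result.add(e.event);
        }
        return result;
    }
}
```

One generic class then replaces the whole family of hasX() / getLastX() / getAllX(Time) methods listed under "Was:".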

IGestures

- Should provide a way to make the bot animate our custom defined gestures

- Will have to pause the bot - stop moving, turning - this can be added as a precondition or it can be forced. Then we will start to run the animation, when it is ready we will let the logic know. Should we pause the logic when the animation is executing? Should the user handle animating more in logic? Or should we handle it here?

- ExecuteAnimation(Animation)

- ExecuteAnimation(List<Animation>)

- IsAnimating()

- StopAnimation()

IInventory

- listener on IPK message - it figures out the item type and executes corresponding action (adds ammo, adds weapon, fires listeners)

I would make it possible to add listeners for weapon, ammo, and other events:

- addItemEventListener()

- addNoAmmoEventListener()

- addNewWeaponListener()

- TODO: other possible inventory related events

- Methods

- UseItem(Id)

- getInventory(Id)

- getAllInventoryCategory(Category)

- getAllInventory()

- getAllWeapons()

- getCurrentWeapon() - implemented in memory Self.

- hasLoadedWeapon()

- getLoadedWeapons()

- getBestLoadedWeapon()

- getWeaponProperties(Weapon)

- getWeaponFiringModes(Weapon)

- getWeaponFiringModeRange(Weapon,Mode), Splash(), Damage() … all info we will know!

- setAutoRearming(boolean value) - when set to true, bot will rearm automatically when out of ammo

- switchToBestWeapon() - basic command, implemented in commands if it uses UnrealScript reasoning

- switchToBestWeapon(Player enemy) - some basic reasoning about distance and available weapons - just to create some example of reasoning

Ondra: switchToBestWeapon(Player target) should be implemented in one of the example bots… people don't dig into the implementation of something, but they study example bots a lot. (just an impression from the week of science)

- destroyItem(id) - destroy item in inventory, can be named useItem or something
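As an example of the "basic reasoning about distance and available weapons" mentioned for switchToBestWeapon(Player enemy), here is a deliberately naive sketch. `WeaponInfo`, `SimpleWeaponChooser` and the notion of a single "effective range" per weapon are assumptions for illustration; real weapon properties would come from the inventory module.

```java
import java.util.List;

// Hypothetical weapon description; an "effective range" per weapon is an assumption.
class WeaponInfo {
    final String name;
    final double effectiveRange;
    final int ammo;

    WeaponInfo(String name, double effectiveRange, int ammo) {
        this.name = name;
        this.effectiveRange = effectiveRange;
        this.ammo = ammo;
    }
}

class SimpleWeaponChooser {
    // Picks the loaded weapon whose effective range is closest to the enemy
    // distance; returns null when no weapon has ammo.
    static WeaponInfo chooseBest(List<WeaponInfo> inventory, double enemyDistance) {
        WeaponInfo best = null;
        double bestGap = Double.MAX_VALUE;
        for (WeaponInfo w : inventory) {
            if (w.ammo <= 0) continue; // skip unloaded weapons
            double gap = Math.abs(w.effectiveRange - enemyDistance);
            if (gap < bestGap) { bestGap = gap; best = w; }
        }
        return best;
    }
}
```

This is the kind of code that fits an example bot well: short enough to read, and obviously improvable.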

User defined modules

Episodic Memory / Emotions / etc. The picture shows clearly where our kind user can put them :).

Remote Control

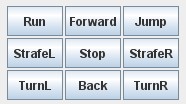

- What buttons should remote control have (picture is just a shot)

- Move forward a bit - ~ 200 ut units

- Move backward a bit - ~ 150 ut units (value should be different from move forward - that way we can set the bot better to desired position)

- Strafe Left, Right ~ 100 ut units

- Turn Left/Right ~ 4000 ut units (rotation units)

- Stop and Jump button

- Run button - can work in two ways - sends the CMOVE command when pushed and STOP when released, or (I think better) sends just CMOVE, and to stop the bot the STOP button has to be pressed.

- PauseLogic button

- SendCommand field and button - for directly sending text command to GB!

- What about recording sequence of commands from remote control for a bot and then store them somewhere?

- Add TOGGLE buttons, that would be kept down and issue some command (MOVE,TURN,ETC.)

- RemoteControl would force a logic pause for a certain time - let's say 1 second - to let the bot finish the command sent by the remote control. It is more confusing without it: the user tries to move the bot but it seems unresponsive, while in fact the logic overrides every user command before it can even be executed.
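The forced pause from the last point can be sketched as a small gate that the bot consults before running its logic. `LogicPauseGate`, its method names and the injectable clock are assumptions made for illustration.

```java
import java.util.function.LongSupplier;

// Hypothetical gate: every remote-control command pauses the logic for a fixed time.
class LogicPauseGate {
    private final LongSupplier clockMillis; // injectable clock (assumption, for testability)
    private final long pauseMillis;         // e.g. 1000 for the suggested 1 second
    private long pausedUntil = Long.MIN_VALUE;

    LogicPauseGate(LongSupplier clockMillis, long pauseMillis) {
        this.clockMillis = clockMillis;
        this.pauseMillis = pauseMillis;
    }

    // Called whenever the remote control sends a command.
    void onRemoteCommand() { pausedUntil = clockMillis.getAsLong() + pauseMillis; }

    // The bot would check this before each doLogic() call.
    boolean mayRunLogic() { return clockMillis.getAsLong() >= pausedUntil; }
}
```

Each new remote command restarts the pause, so holding a TOGGLE button would keep the logic suspended for as long as commands keep arriving.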